Benchmark5

Hello, today we measure the performance of the hinsightd web server against previous versions of itself and other common web servers. What we measure is the number of requests per second each server can process at several concurrency levels, to see how it handles a high load and how fast it can process requests under stress.

For the moment the tests only measure static file serving, because that's the easiest to measure reliably and it's also the focus of the server's development at this time. Stay tuned for reverse proxy and FastCGI benchmarks.

The three versions of the hinsightd server are all event-based, with the 0.10 branch also being multi-threaded. The rest of the servers are mostly default installations, with only minor changes from the default config to suit the test.
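As a rough sketch of how such a sweep can be scripted: the concurrency levels below are the ones in the result tables, and the rows are labelled `ab -c N`, so we assume ApacheBench with its `-n` (total requests) and `-c` (concurrency) options. The request count and the target URL are placeholders we made up for illustration, not the values used for this post.

```python
import re
import shlex

# Concurrency levels taken from the result tables in this post.
CONCURRENCY_LEVELS = [50, 100, 250, 500, 750, 1000]

# Assumptions (not stated in the post): total request count and target URL.
TOTAL_REQUESTS = 100_000
TARGET_URL = "http://localhost:8080/index.html"

# ab prints a line such as "Requests per second:    1234.56 [#/sec] (mean)".
_RPS_LINE = re.compile(r"^Requests per second:\s+([0-9.]+)", re.MULTILINE)


def ab_command(concurrency, total=TOTAL_REQUESTS, url=TARGET_URL):
    """Build the argument list for one ApacheBench run."""
    return ["ab", "-n", str(total), "-c", str(concurrency), url]


def parse_requests_per_second(ab_output):
    """Extract the 'Requests per second' figure from ab's text report."""
    match = _RPS_LINE.search(ab_output)
    if match is None:
        raise ValueError("no 'Requests per second' line found")
    return float(match.group(1))


if __name__ == "__main__":
    # Print one command per concurrency level; each command's output can be
    # fed to parse_requests_per_second() to get the number for the table.
    for level in CONCURRENCY_LEVELS:
        print(shlex.join(ab_command(level)))
```

The parsing is kept separate from running the command so it can be checked against a saved report without a server running.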

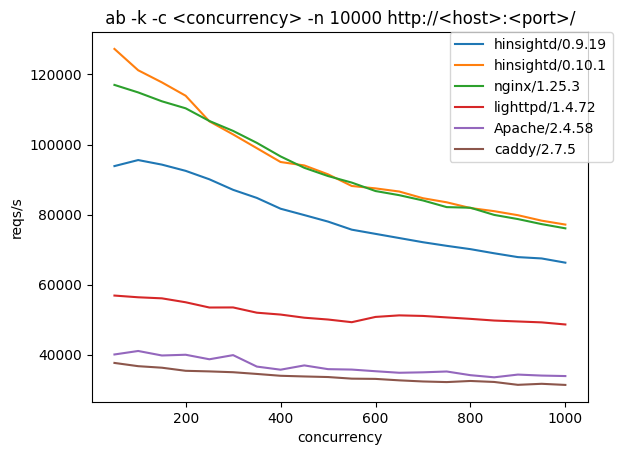

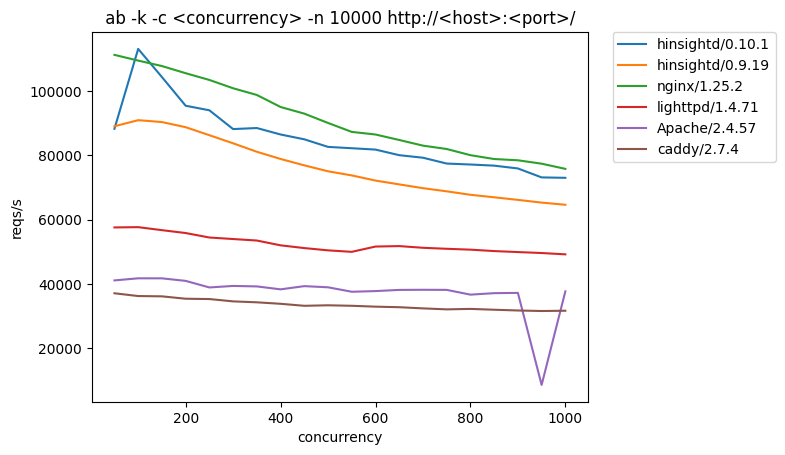

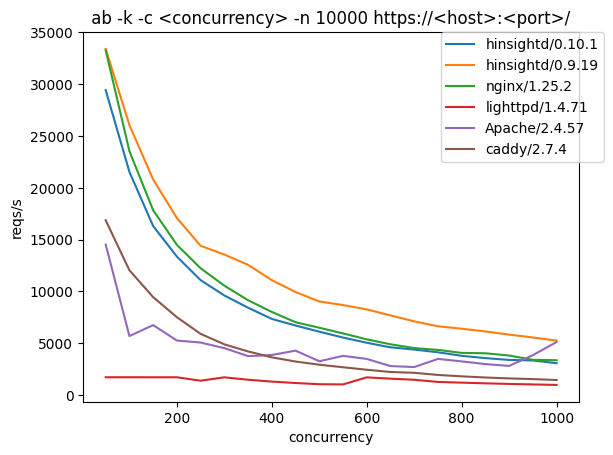

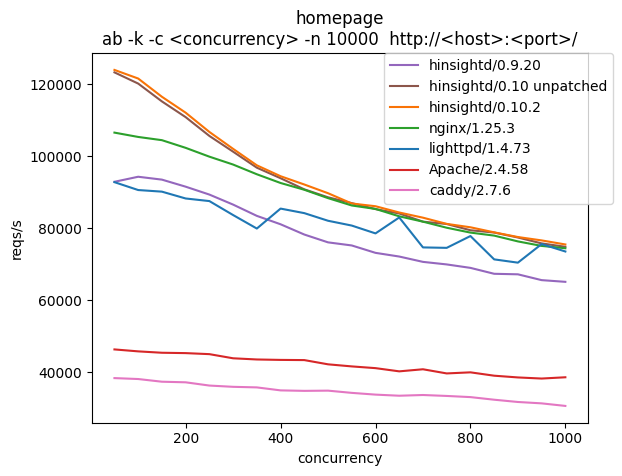

index.html

The target is a small 102-byte file. This tests how fast a server can open a connection, parse headers, and close the connection, so it measures how fast a server processes requests that don't involve any other bottleneck. While this is an ideal case, it is worth mentioning that hinsightd keeps up with and even beats the fastest servers tested.

| test | hinsightd/0.9.20 | hinsightd/0.10 unpatched | hinsightd/0.10.2 | nginx/1.25.3 | lighttpd/1.4.73 | Apache/2.4.58 | caddy/2.7.6 |
|---|---|---|---|---|---|---|---|
| ab -c 50 | 92944.58 | 123365.41 | 124046.39 | 106615.49 | 92842.75 | 46338.77 | 38366.36 |
| ab -c 100 | 94345.85 | 120250.12 | 121654.5 | 105412.96 | 90651.97 | 45797.61 | 38107.58 |
| ab -c 250 | 89390.27 | 105693.72 | 106805.66 | 99929.05 | 87581.01 | 45026.18 | 36292.24 |
| ab -c 500 | 76104.66 | 88635.2 | 89766.61 | 88397.79 | 82109.9 | 42196.05 | 34878.33 |
| ab -c 750 | 69969.7 | 81203.76 | 81240.71 | 80171.25 | 74577.33 | 39665.23 | 33404.04 |
| ab -c 1000 | 65129.18 | 74870.47 | 75520.14 | 74467.93 | 73565.65 | 38595.88 | 30630.78 |

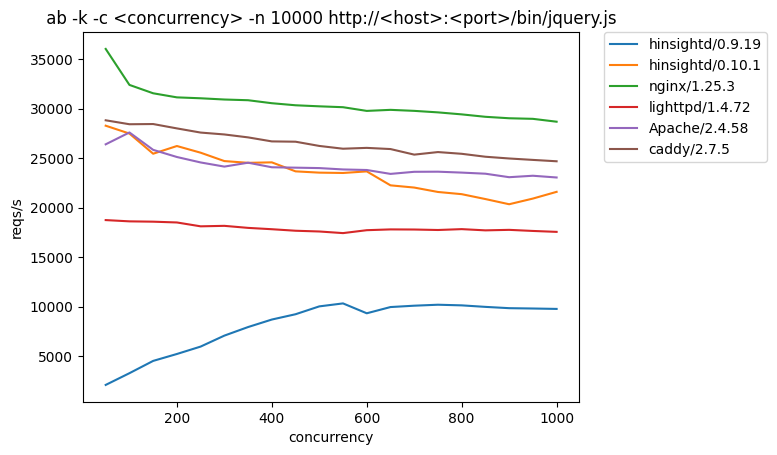

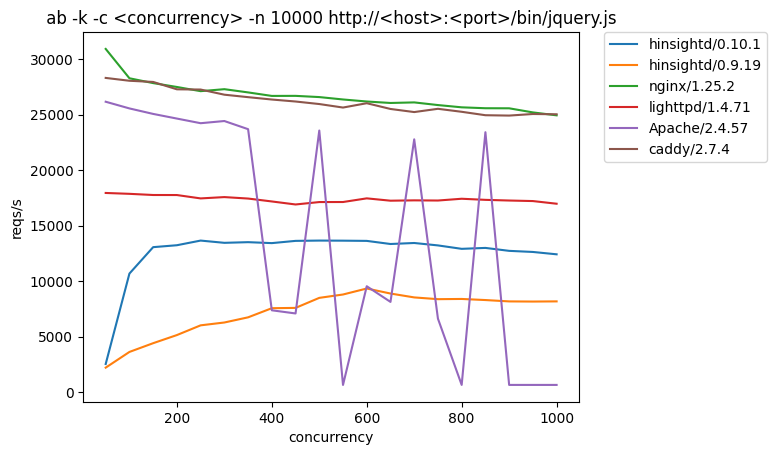

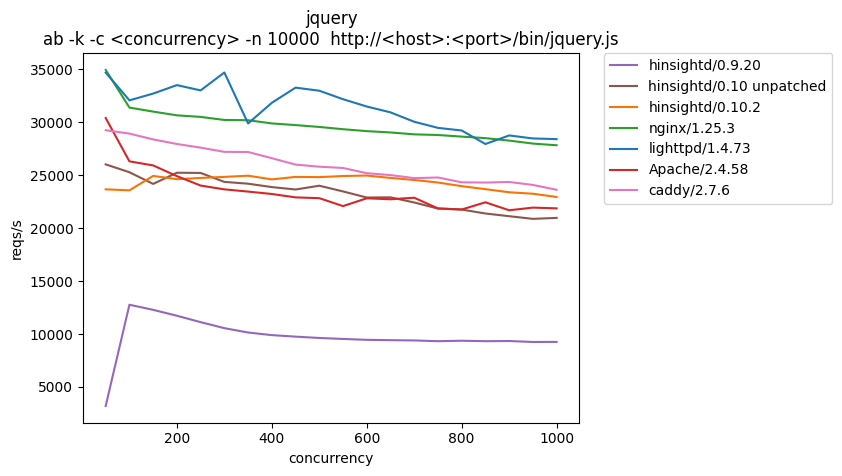

jquery.js

This target is a medium-sized 89.7 kB file served with sendfile support disabled, so this is primarily a disk bandwidth test. The low hinsightd results compared to all the other servers are still a work in progress.

| test | hinsightd/0.9.20 | hinsightd/0.10 unpatched | hinsightd/0.10.2 | nginx/1.25.3 | lighttpd/1.4.73 | Apache/2.4.58 | caddy/2.7.6 |
|---|---|---|---|---|---|---|---|
| ab -c 50 | 3210.75 | 26010.78 | 23656.27 | 34926.93 | 34674.42 | 30386.36 | 29233.18 |
| ab -c 100 | 12762.0 | 25267.33 | 23560.4 | 31371.56 | 32054.57 | 26297.66 | 28916.61 |
| ab -c 250 | 11118.68 | 25201.93 | 24729.22 | 30492.17 | 32994.04 | 24009.72 | 27589.63 |
| ab -c 500 | 9625.7 | 23997.79 | 24807.19 | 29544.02 | 32963.92 | 22820.63 | 25792.07 |
| ab -c 750 | 9319.79 | 21840.07 | 24299.03 | 28783.02 | 29456.56 | 21854.96 | 24772.4 |
| ab -c 1000 | 9253.43 | 20960.05 | 22934.04 | 27815.02 | 28395.05 | 21859.79 | 23612.47 |

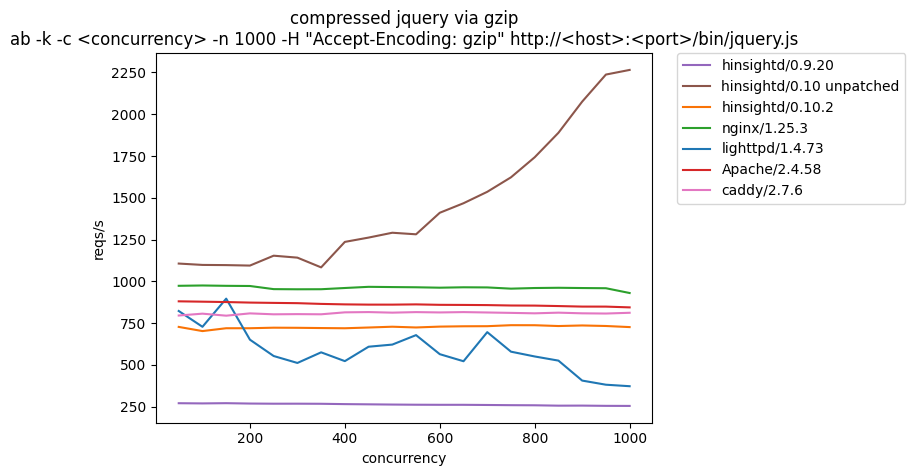

compressed jquery via gzip

Finally, a worthy test of the multithreaded capabilities of the new 0.10 branch. In the previous post we tried to benchmark SSL connections, but that didn't give good results on a single computer, so this is a replacement benchmark where we correctly test the server and not the benchmark program.

Here we test dynamic gzip requests: the server should grab a medium-sized file off the disk, apply the same CPU-intensive algorithm, and then respond. Because applying the gzip algorithm takes several times longer than any other processing, we're basically testing disk bandwidth with a CPU bottleneck.
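To get a feel for why compression dominates, here is a small sketch that compares gzipping a payload with merely copying it in memory. It is not how any of the tested servers work internally: the payload is synthetic text-like filler of about the jquery.js size, the compression level 6 is our assumption, and the absolute timings depend entirely on the machine.

```python
import gzip
import random
import time


def make_payload(size=89_700, seed=0):
    """Build text-like filler of roughly the jquery.js size (not the real file)."""
    rng = random.Random(seed)
    words = ["function", "return", "var", "this", "document", "if", "else", "window"]
    chunks = []
    total = 0
    while total < size:
        word = rng.choice(words) + " "
        chunks.append(word)
        total += len(word)
    return "".join(chunks).encode("ascii")[:size]


def time_call(func, rounds=20):
    """Return the average wall-clock seconds per call of func()."""
    start = time.perf_counter()
    for _ in range(rounds):
        func()
    return (time.perf_counter() - start) / rounds


if __name__ == "__main__":
    payload = make_payload()
    # Copying the buffer stands in for "just move the bytes to the socket".
    copy_s = time_call(lambda: bytearray(payload))
    # Compression level 6 is an assumption, not a setting taken from the post.
    gzip_s = time_call(lambda: gzip.compress(payload, compresslevel=6))
    print(f"copy: {copy_s * 1e6:.1f} us, gzip: {gzip_s * 1e6:.1f} us per request")
```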

As expected, most servers are showing horizontal lines. The weak results of hinsightd 0.9.20 are not worrying: we're running on a 4-core machine and, as noted, hinsightd/0.9 is single-threaded only. It is still outputting straight horizontal lines, which is good.

We had to enable multiple workers for lighttpd despite that being advised against. Without them the line wouldn't wiggle as much, but it would sit right next to the single-threaded hinsightd v0.9 line. Single-threaded servers are really not suited to this kind of test.

And no, hinsightd/0.10 unpatched isn't 10 times faster than the other servers; that's just a bug, left in for reference.

| test | hinsightd/0.9.20 | hinsightd/0.10 unpatched | hinsightd/0.10.2 | nginx/1.25.3 | lighttpd/1.4.73 | Apache/2.4.58 | caddy/2.7.6 |
|---|---|---|---|---|---|---|---|
| ab -c 50 | 270.44 | 1106.35 | 727.16 | 973.17 | 822.39 | 880.28 | 795.4 |
| ab -c 100 | 269.26 | 1098.19 | 702.17 | 975.11 | 727.86 | 878.18 | 806.48 |
| ab -c 250 | 267.51 | 1153.42 | 722.49 | 953.28 | 552.99 | 870.89 | 802.6 |
| ab -c 500 | 262.42 | 1290.8 | 728.32 | 965.61 | 621.38 | 860.48 | 812.53 |
| ab -c 750 | 258.7 | 1622.57 | 737.46 | 956.14 | 578.91 | 855.3 | 811.1 |
| ab -c 1000 | 254.25 | 2265.3 | 726.06 | 930.04 | 372.22 | 844.06 | 811.74 |
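To put the single-threaded versus multi-threaded gap in numbers, the short sketch below divides the hinsightd/0.10.2 column by the hinsightd/0.9.20 column of the gzip table. The figures are copied from the table; only the helper name `speedups` is ours. On these numbers the ratio stays between about 2.6x and 2.9x on the 4-core machine.

```python
# Requests per second from the gzip table, keyed by ab concurrency level.
HIN_0_9_20 = {50: 270.44, 100: 269.26, 250: 267.51, 500: 262.42, 750: 258.7, 1000: 254.25}
HIN_0_10_2 = {50: 727.16, 100: 702.17, 250: 722.49, 500: 728.32, 750: 737.46, 1000: 726.06}


def speedups(new, old):
    """Return new/old throughput ratio for every concurrency level in old."""
    return {level: new[level] / old[level] for level in old}


if __name__ == "__main__":
    for level, ratio in speedups(HIN_0_10_2, HIN_0_9_20).items():
        print(f"ab -c {level}: {ratio:.2f}x")
```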

Conclusions

Performance is very tricky to measure because you have to double-check and doubt all of your results, or you end up knowing less than you knew before you started. If you're interested in more, you can check out parts I, II, III or IV of the benchmarks series.

Notes:

- "hin no patch" (hinsightd/0.10 unpatched in the tables) means commit d0ec626012a2c9bb1edbb4b5c1349cc9da582632, a version prior to fixing the bug mentioned in the post.

- tests were run on a 4-core machine with no hyperthreading. hinsightd 0.10, nginx and lighttpd have 4 workers/threads enabled; the rest are left at the default.

- tests are run several times for each concurrency level and only the highest result is taken into account.

- nginx config, lighttpd config, caddy config. The apache2 config is too long to post, but it's using the worker MPM with ThreadsPerChild 64; before, we were using the event MPM with the default settings.
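The "highest result of several runs" rule from the notes can be sketched like this. The number of repeats is our assumption (the post only says "several"), and `measure` is a hypothetical callable that returns one requests-per-second figure for a given concurrency level, for example by running ab once.

```python
def best_of(measure, levels, repeats=3):
    """Call measure(level) `repeats` times per level and keep the highest value."""
    return {
        level: max(measure(level) for _ in range(repeats))
        for level in levels
    }


if __name__ == "__main__":
    # Placeholder readings for illustration only, not real benchmark results.
    fake_readings = iter([10.0, 12.0, 11.0, 9.0, 9.5, 8.0])
    print(best_of(lambda level: next(fake_readings), [50, 100]))
```

Keeping only the maximum filters out runs disturbed by other activity on the machine, at the cost of hiding run-to-run variance.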

hinsightd web server
